November 4, 2025

The biggest mistake founders are making in AI

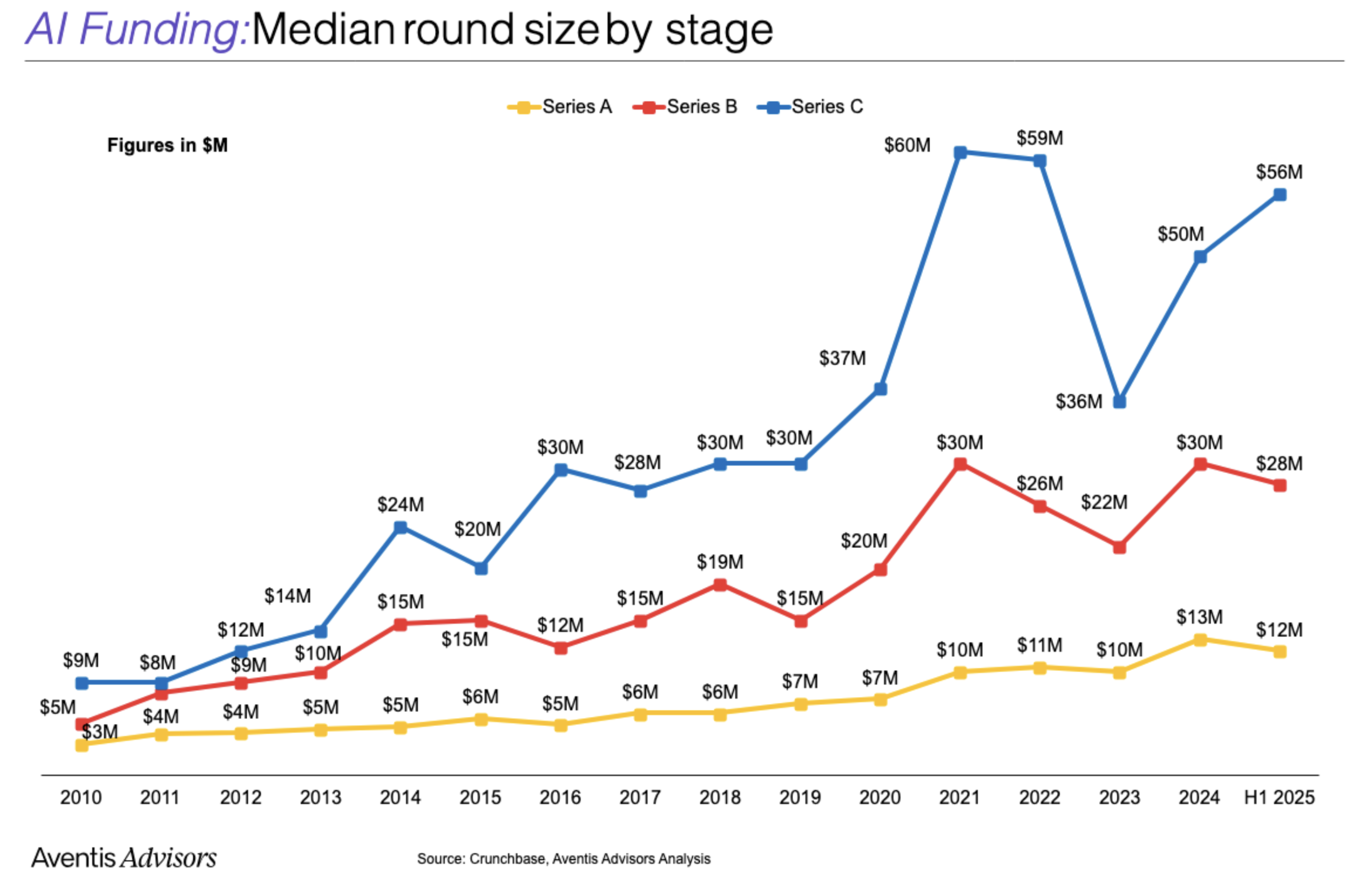

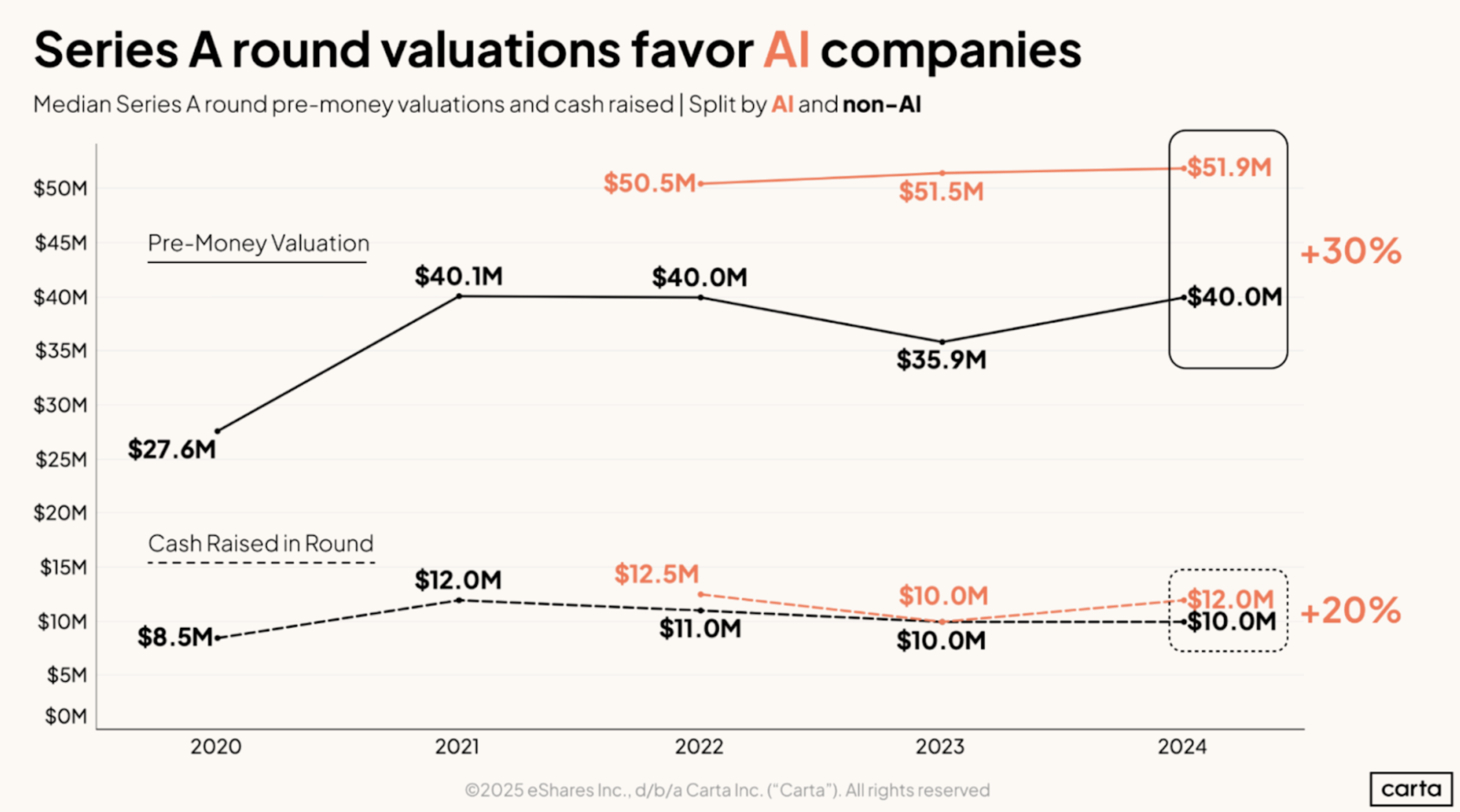

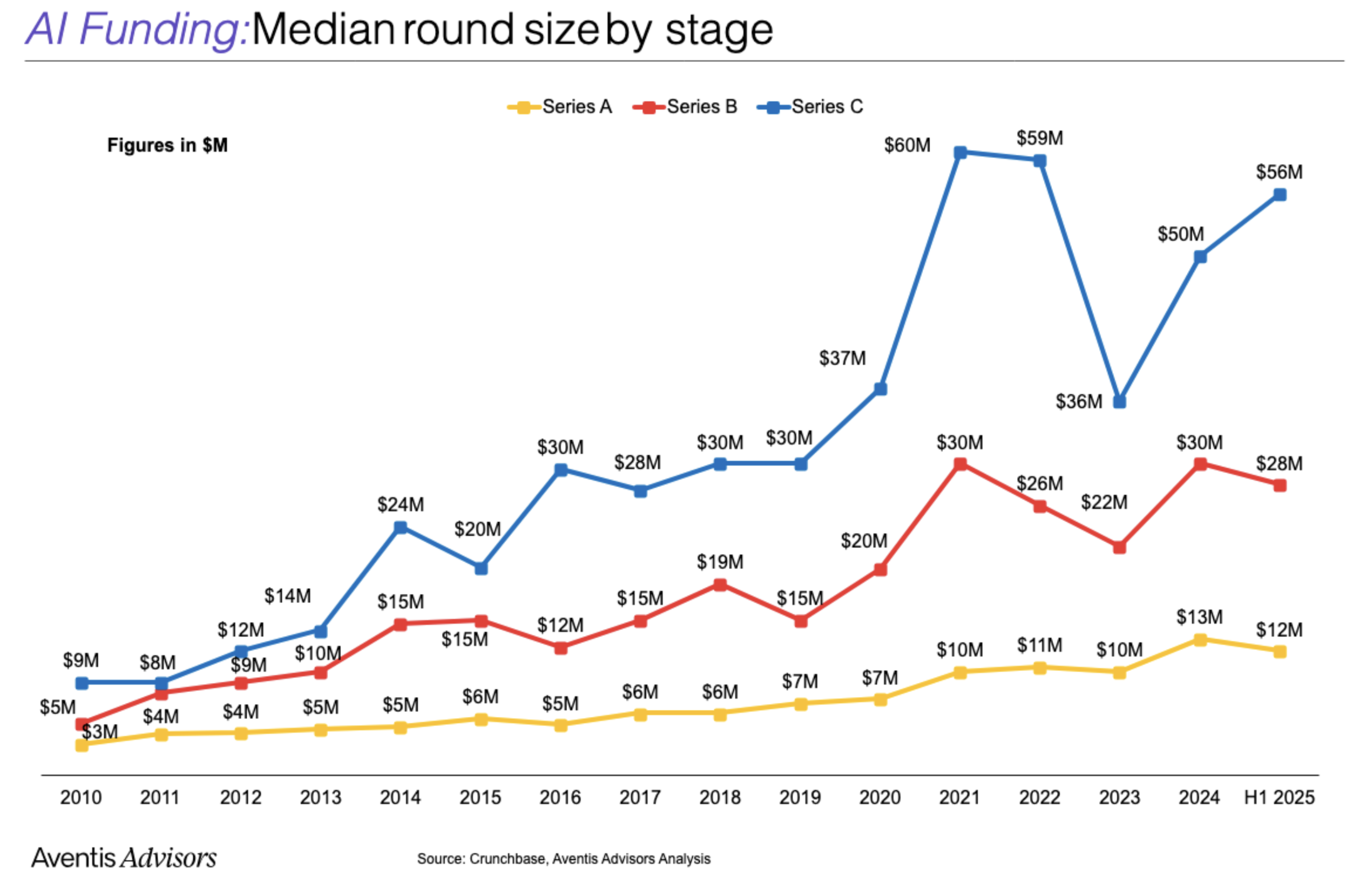

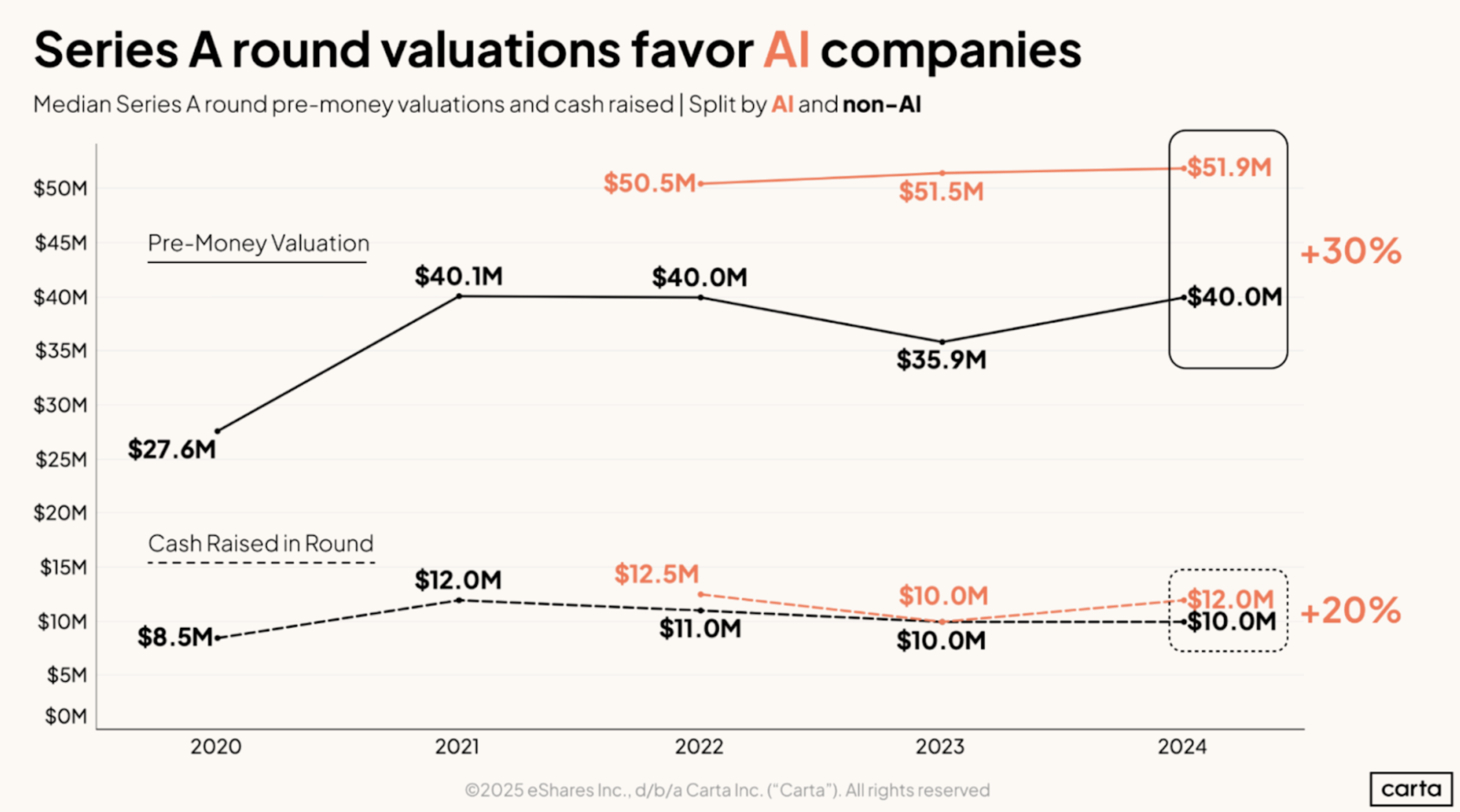

Every company is an AI company and the returns to being an AI company are creating an ever-increasing divide between the haves and have-nots in the venture-backed ecosystem.

When I’m talking to founders, the most important question we’re trying to answer is “How do we make sure we’re perceived as an AI company?” But this recursive Keynesian beauty contest isn’t actually the best way to build a company.

In fact, even in the Age of AI, the old lessons are often still the best. The biggest mistake founders are making is forgetting that scarcity is the most important and enduring source of value.

This insight has been widely understood for a long time and is at the heart of untangling the difference between a moat and a wrapper.

In the age of large language models (LLMs), we see two leading CEOs, Sam Altman at OpenAI and Aravind Srinivas at Perplexity, trying to reframe the question of moats vs. wrappers.

Altman says that the key question is whether your business model gets better or worse as the model gets better. But this just begs the question: OpenAI and the other LLM companies are competing with each other, which is forcing them to compete vertically as well as horizontally. So Altman’s advice doesn’t help founders figure out whether OpenAI or Anthropic will create a competing product in the application layer in a way that will upend their business.

Srinivas, on the other hand, waves away the question, arguing that everything is a wrapper: VC is a wrapper on other people’s money, LLMs are wrappers on Nvidia chips and server racks. But methinks he doth protest too much, even though at some level he’s right – execution is the moat.

Today, we’re seeing that many founders are confusing two fundamental questions about moats and wrappers.

- Moats answer the question: What is easy for you that will be hard for someone else?

- Wrappers answer the question: What is easy now because of AI that used to be hard?

Many founders mistake the latter for the former. “Discovering” that AI can assist with marketing copy or drafting a memo, while helpful, isn’t a significant enough insight to build a lasting business around. In contrast, consistently controlling an agentic workflow through a multi-step, auditable and regulated business process demands a complex combination of engineering, product design and sales skills that many teams simply lack.

This kind of deep, integrated AI application creates a true moat – something your team can do, but that is incredibly difficult for competitors to replicate.

Of course, LLM capabilities are moving fast, so things that seem differentiated today might not be differentiated tomorrow. Moreover, as much as the venture world is already deep on agentic workflows, most businesses are barely scratching the surface of what’s possible and are still very worried about predictability, data leakage and security vulnerabilities.

So, in a world that is already saturated with hyperbolic claims by VCs and founders, how do we discern genuine innovation from mere buzzword bingo? What does it take to be considered an “AI-first company” and make yourself fundable in this environment?

On the product side, we’re witnessing an explosion of “wow” features. Our company, Airship, is a seed-stage vertical SaaS for the HVAC industry. In their very first launch, the team embedded AI to transcribe and summarize customer calls, then automatically generated personalized proposals. Two years ago, this would have been unthinkable.

These capabilities are “wow” for their customers, but the stickiness and moat for Airship are more likely to come from features that use AI below the surface, creating deep vertical knowledge bases of which equipment works with which and helping HVAC installers apply the best sales practices from one employee across the company. It’s the combination of these features – both deep and shallow – that is generating 30-50 percent uplift for the technicians using the software.

As companies mature from Seed to Series A, our focus shifts. We’re looking for wildly ambitious products coupled with incredible velocity. The rationale is simple: the moat for individual AI-powered features is rapidly diminishing. What’s cutting-edge today could be commoditized tomorrow.

This makes us cautious about investing in very fast-growing but thin products – for example, a verticalized note taker for one industry might be growing fast, but we’re looking for evidence that this is a wedge into a system of record, marketplace or data moat.

In either case, even at Series A, we expect tangible results. We look for evidence of AI tackling tasks that would be technically difficult for LLMs to perform out-of-the-box – a challenging thing to predict, admittedly. Alternatively, we seek a robust data moat or a “system of record” dynamic that is deeply integrated into the product, providing a sustainable competitive advantage. These elements create a stronger, more defensible position than simply offering a novel AI-powered feature.

The impact of AI on the growth side is far more straightforward to assess. We expect to see real efficiencies reflected in operating margins. This means observing a significant scale impact without a corresponding increase in operating expenses. We can often demo the technology ourselves, pressure-test its claims, and, most importantly, speak to clients to verify if they are experiencing the promised efficiencies. The proof, in this instance, is in the measurable business outcomes.

Almost everyone is incorporating AI into some part of their product and definitely everyone is using it for ops/coding/marketing copy, but your business has to create something that is scarce, something that would be hard for someone else to build (or at least hard for someone else to sell). As VCs, we often ask the question, “What is the ‘why now’ for this product?” But in the age of LLMs, that question is too easy. The more important question is, “Why you?”
